Forward-Thinking Assessment in the Era of Artificial Intelligence:

Strategies to facilitate deep learning

CONCERNS WITH ACADEMIC DISHONESTY have intensified with the advance of artificial intelligence (AI) technologies. Students can now enter essay questions into AI chatbots such as ChatGPT and receive text-based responses that can pass as authentic student work. While these chatbots cannot generate novel or creative ideas, they can synthesize existing knowledge and organize it into logical arguments.

We are now entering what we would call the third epoch of academic integrity. The first is the period preceding digital technology, the second coincides with the gradual adoption of Information and Communication Technology (ICT), and the current epoch includes advanced, responsive ICT such as AI applications. In many respects, these AI applications have ushered in a new age of plagiarism and cheating (Xiao et al., 2022). So, what should educators do next?

Cheating and artificial intelligence

Estimates of cheating vary widely across national contexts and sectors. For example, more than 50 percent of high school students in the United States reported some form of cheating, such as copying an internet document to submit as part of an assignment or cheating during a test (Eaton & Hughes, 2022). More than half of Canadian high school students also report cheating, with a higher rate (73 percent) for written assignments (Eaton & Hughes, 2022). In both Canada and the U.S., reported rates among undergraduate students are considerably lower (approximately five percent), but cheating remains a noteworthy issue at that level. Less is known about how OpenAI’s recent launch of ChatGPT will affect cheating in compulsory and higher education settings, in Canada or elsewhere. Perhaps in recognition of this potential issue, OpenAI’s terms of use state that “you must be at least 13 years old to use the Services. If you are under 18, you must have your parent or legal guardian’s permission to use the Services” (OpenAI, 2023).

Identifying cheating that uses ChatGPT remains a formidable challenge for popular plagiarism detection tools. For example, one study found that essays generated by ChatGPT (50 in total) were sophisticated enough to evade traditional plagiarism-checking software (Khalil & Er, 2023). In other studies, ChatGPT achieved the mean grade on the national high school English reading comprehension exam in the Netherlands (de Winter, 2023) and passed law school exams (Choi et al., 2023). Given that ChatGPT reached 100 million active users in January 2023, just two months after its launch, it is understandable why some have argued that AI applications such as ChatGPT will precipitate a “tsunami effect” of changes to contemporary schooling (García-Peñalvo, 2023).

Current policy responses

Not surprisingly, there are opposing views on how to respond to ChatGPT and other AI language models. Some argue that educators should embrace AI as a useful tool for teaching and learning, provided its use is cited correctly (Willems, 2023). Others assert that educators need additional training and resources to better detect cheating (Abdelaal et al., 2019). Still others suggest that the challenges AI poses for education must ultimately lead to assessment reforms that prevent students from using AI to complete their assignments (Cotton & Cotton, 2023). Even with likely further advances in cheating detection software, schools at all levels need to rethink their pedagogical and assessment approaches to respond to a continually evolving information world, one in which computers and other technologies are increasingly capable of synthesizing and organizing information.

Interestingly, some educators are actively exploring how to incorporate AI into their teaching and assessment methods. Fyfe (2022) describes a “pedagogical experiment” in which he asked students to generate content from a version of GPT-2 and intentionally weave it throughout their final essays. Students were then asked to confront the availability of AI as a writing tool and reflect on the ethical use of emerging AI language models. This example suggests that AI could be used not only to support student learning of core content but also to extend critical digital literacy skills.

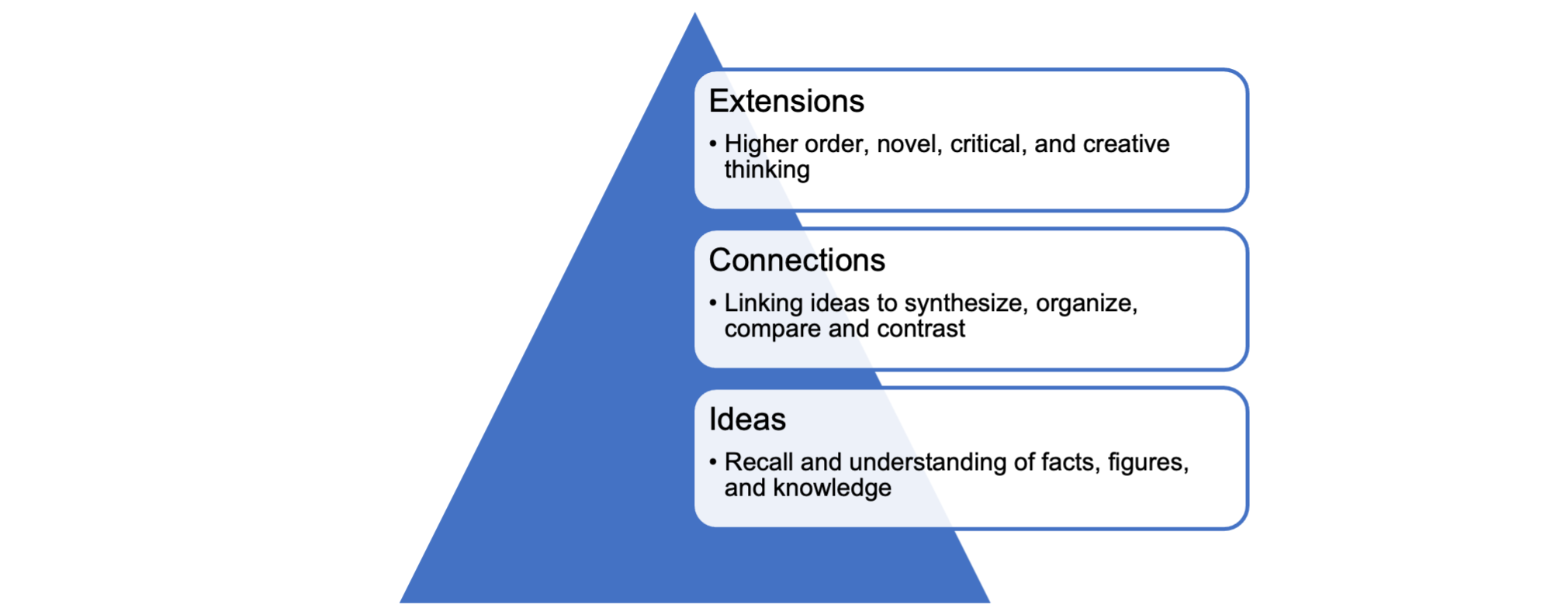

To put a finer point on this, learning taxonomies suggest that integrating AI into teaching and learning can raise the level at which students engage with their learning. Take, for instance, a simple learning taxonomy like I.C.E. (Fostaty-Young & Wilson, 1995), where “I” represents a student’s capacity to remember and work with basic content ideas (e.g. facts, figures, knowledge); “C” represents a student’s ability to make connections between ideas (e.g. to organize ideas into a logical argument, to compare and contrast, to synthesize); and “E” represents a student’s capacity to make extensions. The “extensions” level of learning, also referred to as “higher order thinking,” is where novel, critical, and creative outputs occur. At present, AI is unable to make extensions; this therefore becomes the role and function of students: to understand the ideas presented by texts, teachers, and AI bots and use them to establish novel extensions.

The challenge, of course, is that not all curriculum expectations require extension-level learning. Sometimes students need to learn, and demonstrate their learning of, basic ideas and connections. So the question remains: how can AI and assessment work together to support all types of learning? Phrased differently, how can teachers ensure their teaching and assessment practices are not susceptible to academic integrity issues?

Rethinking assessment with artificial intelligence in mind

There is little doubt that the emergence of ChatGPT represents the “tip of the iceberg” in terms of the use of AI in society and in education. In preparation for its growing presence in education, we provide six key practices to deter the misuse of AI in assessment and evaluation processes.

- Be explicit about the learning goals and the role of AI in assessments and assignments.

- Collaboratively establish assignment criteria with students.

- Engage in feedback cycles (peer, self, and teacher feedback) and include feedback drafts in the final submission of work.

- Leverage performance tasks (e.g. presentations, videos, artistic work) in classroom assessment practices.

- Use authentic assessments (e.g. community-based activities, real-world tasks) whenever possible.

- Collaboratively grade assessments with students.

These six key practices have already been shown to support more effective learning and assessment. Importantly, their continued use can either accommodate AI where appropriate or deter its use when necessary. For example, while we do not devalue learning goals that include foundational knowledge and conceptual understanding, the presence of AI creates an opportunity to identify more complex learning goals. These goals may build on teaching and learning that uses AI but then require learners to evaluate or create extensions in their learning. Similarly, clear criteria help students focus their learning, and co-creating criteria with students can prompt discussions about which aspects of an assignment or task may use AI to supplement the work. Feedback cycles better reflect the processes we actually use to complete complex tasks, and peer, self, and teacher feedback improves the quality of both work and learning. While AI may be incorporated into early drafts, the revision process will require additional learner effort. Performance and authentic assessments both require a high level of student engagement to demonstrate a number of integrated learning outcomes. As above, AI may supplement some of the foundational aspects of the work or task, but the final product will demonstrate higher-order and critical thinking skills. Lastly, collaborative grading has a number of benefits, including greater assessment consistency, reduced bias, and, we would argue, a greater potential to detect inappropriate use of AI and/or plagiarism.

Taken together, these practices not only make clear the role of AI in teaching, learning, and assessment but also encourage students to be more agentic in the learning and assessment process. Effective learning requires students to engage actively, collaboratively, and orally in their learning and to demonstrate their learning through effective assessment. Assessment practices embedded within the learning process (formative assessment) will help reduce academic integrity concerns while encouraging more authentic and alternative assessments. The current debate around AI technologies such as ChatGPT must quickly shift from concerns about assessment integrity to how we use these technologies in our classrooms to enable our students to demonstrate more complex and valued learning outcomes. In this respect, AI provides the necessary impetus to spur more forward-thinking assessment practices and policies within provincial and national education systems.

References

Abdelaal, E., Gamage, S. W., & Mills, J. E. (2019). Artificial Intelligence is a tool for cheating academic integrity. Proceedings of the AAEE2019 Conference. Available on ResearchGate.

Choi, J. H., Hickman, K. E., et al. (2023). ChatGPT goes to law school. Minnesota Legal Studies Research Paper No. 23-03. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4335905

Cotton, D. R. E., & Cotton, P. A. (2023). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. EdArXiv Preprints. https://edarxiv.org/mrz8h

de Winter, J. C. F. (2023). Can ChatGPT pass high school exams on English language comprehension? ResearchGate. https://www.researchgate.net/publication/366659237_Can_ChatGPT_pass_high_school_exams_on_English_Language_Comprehension

Eaton, S. E., & Hughes, J. C. (2022). Academic Integrity in Canada. Springer. https://library.oapen.org/bitstream/handle/20.500.12657/53333/1/978-3-030-83255-1.pdf#page=99

Fyfe, P. (2022). How to cheat on your final paper: Assigning AI for student writing. AI & Society. https://doi.org/10.1007/s00146-022-01397-z

García-Peñalvo, F. J. (2023). The perception of Artificial Intelligence in educational contexts after the launch of ChatGPT: disruption or panic? Ediciones Universidad de Salamanca. https://repositorio.grial.eu/handle/grial/2838

Khalil, M., & Er, K. (2023). Will ChatGPT get you caught? Rethinking of plagiarism detection. arXiv. https://doi.org/10.48550/arXiv.2302.04335

OpenAI. (2023). Terms of use. https://openai.com/policies/terms-of-use

Willems, J. (2023). ChatGPT at universities – the least of our concerns. SSRN Journal. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4334162

Xiao, Y., Chatterjee, S., & Gehringer, E. (2022). A new era of plagiarism the danger of cheating using AI. Proceedings of the 20th International Conference on Information Technology Based Higher Education and Training (ITHET). https://ieeexplore.ieee.org/abstract/document/10031827